[MM'23] 3DStyle-Diffusion: Pursuing Fine-grained Text-driven 3D Stylization with 2D Diffusion Models

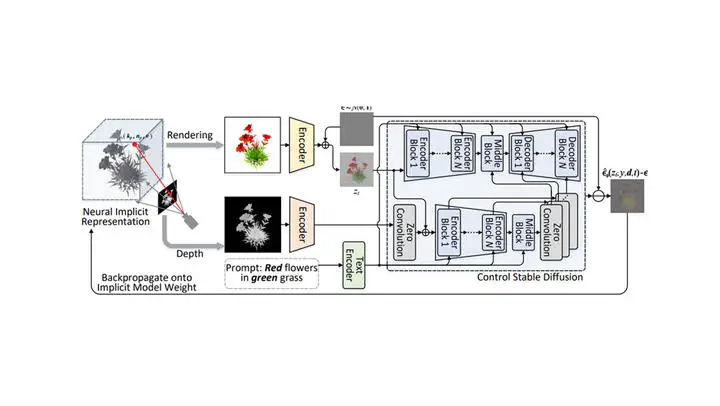

3D content creation via text-driven stylization poses a fundamental challenge to the multimedia and graphics community. Recent advances in cross-modal foundation models (e.g., CLIP) have made this problem feasible. Existing approaches commonly leverage CLIP to align the holistic semantics of the stylized mesh with the given text prompt. Nevertheless, it is not trivial to achieve more controllable stylization of fine-grained details in 3D meshes based solely on such semantic-level cross-modal supervision. In this work, we propose a new 3DStyle-Diffusion model that enables fine-grained stylization of 3D meshes with additional controllable appearance and geometric guidance from 2D diffusion models. Technically, 3DStyle-Diffusion first parameterizes the texture of a 3D mesh into reflectance properties and scene lighting using implicit MLP networks. Meanwhile, an accurate depth map of each sampled view is obtained from the 3D mesh. Then, 3DStyle-Diffusion leverages a pre-trained controllable 2D diffusion model to guide the learning of the rendered images, encouraging the synthesized image of each view to be semantically aligned with the text prompt and geometrically consistent with the depth map. This design integrates image rendering via implicit MLP networks and the diffusion process of image synthesis in an end-to-end fashion, enabling high-quality fine-grained stylization of 3D meshes. We also build a new dataset derived from Objaverse, together with an evaluation protocol for this task. Both qualitative and quantitative experiments validate the capability of 3DStyle-Diffusion. Source code and data are available at https://github.com/yanghb22-fdu/3DStyle-Diffusion-Official.
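To make the data flow described above more concrete, the following is a minimal, dependency-free sketch of the rendering side only: two small implicit MLPs (one for reflectance, one for lighting) produce per-pixel colors, and the per-view depths are normalized into a depth map that a depth-conditioned diffusion model could consume. It is not the released implementation. All names (`make_mlp`, `mlp_forward`, `normalize_depth`, `render_view`), the layer sizes, the simple `albedo * light` shading rule, and the "near is bright" depth convention are assumptions made for illustration, and the diffusion guidance step itself is omitted.

```python
import math
import random

def make_mlp(sizes, seed=0):
    """Build a tiny randomly initialised MLP as a list of (weights, biases) layers."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        w = [[rng.uniform(-1, 1) / math.sqrt(n_in) for _ in range(n_in)]
             for _ in range(n_out)]
        layers.append((w, [0.0] * n_out))
    return layers

def mlp_forward(layers, x):
    """ReLU on hidden layers, sigmoid on the output so values lie in (0, 1)."""
    for i, (w, b) in enumerate(layers):
        x = [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]
        is_last = i == len(layers) - 1
        x = [1 / (1 + math.exp(-v)) if is_last else max(0.0, v) for v in x]
    return x

def normalize_depth(depths):
    """Map hit depths to [0, 1] with the nearest hit at 1; None (background) maps to 0."""
    hits = [d for d in depths if d is not None]
    if not hits:
        return [0.0 for _ in depths]
    near, far = min(hits), max(hits)
    span = (far - near) or 1.0  # avoid division by zero for a flat view
    return [0.0 if d is None else 1.0 - (d - near) / span for d in depths]

def render_view(hits, reflectance, lighting):
    """hits: one entry per pixel, either None or (surface_point, normal, depth)."""
    pixels = []
    for hit in hits:
        if hit is None:
            pixels.append([0.0, 0.0, 0.0])  # background pixel
            continue
        point, normal, _ = hit
        albedo = mlp_forward(reflectance, point)  # reflectance from surface position
        light = mlp_forward(lighting, normal)     # lighting from surface normal
        pixels.append([a * l for a, l in zip(albedo, light)])
    depth_map = normalize_depth([None if h is None else h[2] for h in hits])
    return pixels, depth_map

# Hypothetical 4-pixel view: three mesh hits and one background pixel.
reflectance = make_mlp([3, 16, 3], seed=0)
lighting = make_mlp([3, 16, 3], seed=1)
hits = [
    ((0.0, 0.0, 1.0), (0.0, 0.0, 1.0), 2.0),
    None,
    ((0.3, 0.1, 0.9), (0.0, 0.6, 0.8), 2.5),
    ((0.5, 0.0, 0.8), (0.6, 0.0, 0.8), 3.0),
]
pixels, depth_map = render_view(hits, reflectance, lighting)
```

In a full pipeline, `pixels` would be the rendered image whose MLP parameters are optimized, while `depth_map` and the text prompt would condition the frozen diffusion model that supplies the training signal. Note that in this toy normalization the farthest hit shares the value 0 with the background.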